Sindice Blog

-

Farewell to Giovanni and Renaud

It is with sadness that the Sindice team here at Insight says goodbye to two of the original founders of Sindice.com, Dr. Giovanni Tummarello and Dr. Renaud Delbru. Renaud and Giovanni have been with the project since its inception and have been crucial in making Sindice a showcase for what is possible with semantic web technologies, tying in and connecting with many other researchers in DERI and later in Insight.

Giovanni and Renaud were leading members of the Sindice team from the original Sindice paper to a platform that could ingest 100 million documents a day. Along the way they collaborated with other DERIans such as Eyal Oren and Richard Cyganiak (and many others) on Sindice.com, spinning off a top-level Apache project, a popular Lucene/Solr plugin and a number of very successful papers.

Giovanni and Renaud are now moving towards commercialisation, hoping to repeat their many successes at DERI/Insight/NUIG in industry. They will be focusing their energy full time on the startup SindiceTech, which has licensed some of DERI’s technologies from NUI Galway.

For Sindice.com this is the closing of one chapter and the start of a new one. We are busy preparing a new look for the website to showcase recent and ongoing Sindice/Insight research, and we are moving the sindice.com newsgroup to the simpler.

So we say goodbye to Giovanni and Renaud, who will stay in Galway with SindiceTech. From all the team here at Insight and at NUI Galway, we wish them good luck and every success with their new venture, and we hope we will have the opportunity to work with them via SindiceTech in the future.

-

The SIREn 1.0 Open Source Release and its Use in the Semantic Web Community

We are happy to announce the availability of SIREn 1.0 under the Apache License Version 2.0. SIREn is the information retrieval engine that has been powering Sindice.com these past years. SIREn has been developed as a plugin for Apache Lucene and Apache Solr to enable efficient indexing and searching of arbitrarily structured documents, e.g., JSON or XML. While it does not match the RDF data model (graph vs tree), and is therefore NOT a SPARQL endpoint replacement, it can be used in many interesting ways by the Linked Data / Semantic Web community. We explain below how to index and search RDF graphs using the JSON-LD format.

SIREn is available for download at this homepage and the source code is available on github.

Acknowledgements for this release must go to the European FP7 LOD2 project of which SIREn is a deliverable, and to the Irish Research Council for Science, Engineering and Technology which has supported this project.

Indexing and Searching JSON-LD with SIREn

SIREn’s API is based on the JSON data format, and it is therefore compatible with JSON-LD, a JSON-based syntax for serialising Linked Data. To leverage the full power of SIREn and search not only on text and entities but also on relations between entities, one can use JSON-LD Framing to map a graph to a tree structure. Mature libraries in various programming environments (see here for a list of libraries) are available to export RDF data into the JSON-LD format.
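To make the graph-to-tree idea and the nested query semantics more concrete, here is a minimal Python sketch. It is only an illustration under our own assumptions: `frame` and `matches` are hypothetical helpers written for this post, not the JSON-LD Framing algorithm and not SIREn's API, and the identifiers of the bike shop example below are shortened for readability. The key point is that a nested condition such as `product : { category : chains, price : ... }` must be satisfied by one and the same product node.

```python
from collections import defaultdict


def frame(triples, root, embed_predicates):
    """Nest (subject, predicate, object) triples into a tree rooted at `root`.

    Objects reached through a predicate in `embed_predicates` become child
    nodes; all other objects stay plain values. A simplified stand-in for
    JSON-LD Framing, not the JSON-LD Framing algorithm itself.
    """
    by_subject = defaultdict(list)
    for s, p, o in triples:
        by_subject[s].append((p, o))

    def build(node, path):
        tree = {"@id": node}
        for p, o in by_subject[node]:
            # Embed only along chosen predicates, and never re-enter a node
            # already on the current path (guards against cycles).
            if p in embed_predicates and o not in path:
                value = build(o, path | {o})
            else:
                value = o
            tree.setdefault(p, []).append(value)
        return tree

    return build(root, frozenset([root]))


def matches(tree, pattern):
    """True if every key of `pattern` is satisfied by some value in `tree`.

    A condition is a plain value (equality), a callable (predicate) or a
    nested dict, which must be satisfied by one and the same child node.
    """
    for key, cond in pattern.items():
        values = tree.get(key, [])
        if isinstance(cond, dict):
            ok = any(isinstance(v, dict) and matches(v, cond) for v in values)
        elif callable(cond):
            ok = any(cond(v) for v in values)
        else:
            ok = cond in values
        if not ok:
            return False
    return True


# Shortened identifiers stand in for the full URIs of the bike shop example.
triples = [
    ("store", "@type", "Store"),
    ("store", "product", "swift-chain"),
    ("store", "product", "speedy-lube"),
    ("swift-chain", "category", "parts"),
    ("swift-chain", "category", "chains"),
    ("swift-chain", "price", 10),
    ("speedy-lube", "category", "lube"),
    ("speedy-lube", "category", "chains"),
    ("speedy-lube", "price", 5),
]
tree = frame(triples, "store", {"product"})

# Shops selling chains priced at most 20 (the range [* TO 20] is inclusive).
query = {"@type": "Store",
         "product": {"category": "chains", "price": lambda v: v <= 20}}
print(matches(tree, query))  # True
```

A linear check like this only shows what a match means; SIREn itself answers such queries from its index rather than by walking trees.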

With this set of tools, one could transform the following N-Triples document about an online bike shop:

<http://store.example.com/> <http://ns.example.com/store#product> <http://store.example.com/products/links-speedy-lube> .
<http://store.example.com/> <http://ns.example.com/store#product> <http://store.example.com/products/links-swift-chain> .
<http://store.example.com/> <http://purl.org/dc/terms/description> "The most \"linked\" bike store on earth!" .
<http://store.example.com/> <http://purl.org/dc/terms/title> "Links Bike Shop" .
<http://store.example.com/> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://ns.example.com/store#Store> .
<http://store.example.com/products/links-speedy-lube> <http://ns.example.com/store#category> <http://store.example.com/category/chains> .
<http://store.example.com/products/links-speedy-lube> <http://ns.example.com/store#category> <http://store.example.com/category/lube> .
<http://store.example.com/products/links-speedy-lube> <http://ns.example.com/store#price> "5"^^<http://www.w3.org/2001/XMLSchema#integer> .
<http://store.example.com/products/links-speedy-lube> <http://ns.example.com/store#stock> "20"^^<http://www.w3.org/2001/XMLSchema#integer> .
<http://store.example.com/products/links-speedy-lube> <http://purl.org/dc/terms/description> "Lubricant for your chain links." .
<http://store.example.com/products/links-speedy-lube> <http://purl.org/dc/terms/title> "Links Speedy Lube" .
<http://store.example.com/products/links-speedy-lube> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://ns.example.com/store#Product> .
<http://store.example.com/products/links-swift-chain> <http://ns.example.com/store#category> <http://store.example.com/category/chains> .
<http://store.example.com/products/links-swift-chain> <http://ns.example.com/store#category> <http://store.example.com/category/parts> .
<http://store.example.com/products/links-swift-chain> <http://ns.example.com/store#price> "10"^^<http://www.w3.org/2001/XMLSchema#integer> .
<http://store.example.com/products/links-swift-chain> <http://ns.example.com/store#stock> "10"^^<http://www.w3.org/2001/XMLSchema#integer> .
<http://store.example.com/products/links-swift-chain> <http://purl.org/dc/terms/description> "A fine chain with many links." .
<http://store.example.com/products/links-swift-chain> <http://purl.org/dc/terms/title> "Links Swift Chain" .
<http://store.example.com/products/links-swift-chain> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://ns.example.com/store#Product> .

into a JSON-LD document (the ‘@context’ part has been removed for readability):

{
  "@id": "http://store.example.com/",
  "@type": "Store",
  "name": "Links Bike Shop",
  "description": "The most \"linked\" bike store on earth!",
  "product": [
    {
      "@id": "p:links-swift-chain",
      "@type": "Product",
      "name": "Links Swift Chain",
      "description": "A fine chain with many links.",
      "category": ["cat:parts", "cat:chains"],
      "price": 10.00,
      "stock": 10
    },
    {
      "@id": "p:links-speedy-lube",
      "@type": "Product",
      "name": "Links Speedy Lube",
      "description": "Lubricant for your chain links.",
      "category": ["cat:lube", "cat:chains"],
      "price": 5.00,
      "stock": 20
    }
  ]
}

and then index it as a SIREn document and search bike shops based on their products, e.g., find all shops selling chains with a price of at most 20:

(@type : Store) AND (product : { category : chains, price : int([* TO 20]) })

About Other Sindice Open Source Releases

This release follows the open source release of Sig.ma and, more notably, of our RDF extraction library Any23 (now a top-level Apache project).

We are currently requesting permission to release other components of Sindice, as we believe this would be quite beneficial to the community. These include the assisted SPARQL query editor, powered by the RDF graph summary. We hope to return to this soon.

-

How we ingested 100M semantic documents in a day (And where do they come from)

How to get some unexpected big data satisfaction.

First: build an infrastructure to process millions of documents. Instead of just doing it home-brew, however, do your big data homework, no shortcuts. Second: unclog a long-standing clogged pipe.

The feeling is one of “it all makes sense”, and it happened to us the other day when we started the dataset indexing pipeline with a queue of a dozen large datasets (see later for the explanation). After that, we just sat back and watched the Sindice infrastructure, which usually takes 1-2 million documents per day, reason over and index 50-100 times as much in the same timeframe, no sweat.

The outcome showing on the homepage shortly after:

But what exactly is this “Dataset Pipeline”?

Well, it’s simply a dataset-by-dataset approach to ingesting data.

In other words, we decided to do away with basic URL-to-URL crawling for the LOD cloud websites and instead take a dataset-by-dataset approach, choosing just the datasets we consider valuable to index.

This, unfortunately, is a bit of manual work. Yes, it is sad that LOD (an initiative born to make data more accessible) hasn’t really succeeded in getting a way to collect the “deep semantic web” adopted, in the same way that the simple sitemaps.org initiative has for the normal web. But that is the case.

So what we do now in terms of data acquisition is:

- For normal websites that expose structured data (RDF, RDFa, Microformats, Schema etc.), we support Sitemaps. Just submit your sitemap and you’ll see it indexed in Sindice. For these websites we can be in sync (24h delay or less): just expose a simple sitemap “lastmod” field and we’ll get your latest data.

- For RDF datasets (e.g. LOD) you have to ask and send us a pointer to the dump :) . We’ll add you to a manual watchlist and possibly to the list of those that also make it to SPARQL. Refresh can be scheduled at regular intervals.

Which brings us to the next question: which data makes it to the homepage search and which to the SPARQL endpoint? Is it the same?

No, it’s not the same. The frontpage search of Sindice is based on SIREn and indexes everything (including the full materialization of the reasoning). This index is also updated multiple times a day, e.g. in response to pings or sitemap updates. As a downside, while our frontpage advanced search (plus the cache API) can certainly be enough to implement some very cool apps (see http://sig.ma, based on a combination of these APIs), it certainly won’t suffice to answer all possible questions.

The SPARQL endpoint, on the other hand, has full capabilities but only contains a selected subset of the data in Sindice. The Sindice SPARQL datasets consist of the first few hundred websites in terms of ranking (see a list here) and, at the moment, the following RDF LOD datasets:

dbpedia, triples= 698.420.083

europeana, triples= 117.118.947

cordis, triples= 7.107.144

eures, triples= 7.101.481

ookaboo, triples= 51.088.319

geonames, triples= 114.390.681

worldbank, triples= 87.351.631

omim, triples= 358.222

sider, triples= 18.884.280

goa, triples= 11.008.490

chembl, triples= 137.449.790 (and by the way, also see hcls.sindicetech.com for bioscience data and assisted tools)

ctd, triples= 103.354.444

drugbank, triples= 456.112

dblp, triples= 81.986.947
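The per-entity slicing described in the next paragraph is essentially a group-by-subject. Below is a minimal single-process Python sketch of that map/reduce shape, assuming N-Triples input and grouping only by subject (outgoing triples); the function names and the policy of dropping oversized entities are our own assumptions, not the actual Sindice Hadoop job.

```python
import re
from collections import defaultdict

# The post's 5MB document limit, read here as 5 * 1024 * 1024 bytes.
MAX_DOC_BYTES = 5 * 1024 * 1024

# Subject of an N-Triples line: an IRI or a blank node label at line start.
SUBJECT = re.compile(r"^(<[^>]*>|_:\S+)\s")


def map_line(line):
    """Map step: emit (subject, triple line), or None for blanks/comments."""
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    m = SUBJECT.match(line)
    return (m.group(1), line) if m else None


def reduce_entity(lines):
    """Reduce step: one entity description document per subject.

    Returns None when the document exceeds the size limit (a hypothetical
    policy; the post does not say what happens to oversized entities).
    """
    doc = "\n".join(lines) + "\n"
    return doc if len(doc.encode("utf-8")) <= MAX_DOC_BYTES else None


def slice_dump(lines):
    """Turn one big N-Triples dump into {subject: entity document}."""
    groups = defaultdict(list)  # plays the role of the MapReduce shuffle
    for line in lines:
        pair = map_line(line)
        if pair is not None:
            groups[pair[0]].append(pair[1])
    docs = {}
    for subject, triple_lines in groups.items():
        doc = reduce_entity(triple_lines)
        if doc is not None:
            docs[subject] = doc
    return docs


dump = [
    '<http://ex.org/a> <http://ex.org/p> "1" .',
    '<http://ex.org/b> <http://ex.org/p> "2" .',
    '<http://ex.org/a> <http://ex.org/q> <http://ex.org/b> .',
]
docs = slice_dump(dump)
print(sorted(docs))  # ['<http://ex.org/a>', '<http://ex.org/b>']
```

On a cluster, the dictionary in `slice_dump` is replaced by the framework's shuffle, so each reducer sees all lines for one subject without any single machine holding the whole dump.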

There is, however, some “impedance” mismatch between the SIREn and SPARQL approaches. SIREn is a document index (in Sindice we arbitrarily limit the size of documents to 5MB). So you might ask: how do we manage to index big datasets that come in “one chunk”, like the above, in our frontpage search?

The answer is that we first “slice them” per entity. The Dataset Pipeline mentioned above contains a MapReduce job that turns a single big dataset into millions of small RDF files containing ‘entity descriptions’, much like what you get when resolving a URI that belongs to a LOD dataset. This way we generated, out of the above datasets, descriptions for approximately 100 million entities. These became the 100M documents that went through the pipeline in well under one day on our DERI/Sindice.com 11-machine Hadoop cluster.

A hint of what’s next: all the above in a comprehensible UI. From the standard Sindice search you’ll be able to see what’s updated and what’s not, what’s in SPARQL and what’s not, what’s in each dataset (with links to each site’s in-depth RDF analytics) and more. You’ll also be able to “request” that a normal website (e.g. your site) be included in the list of those that make it to the SPARQL endpoint.

Until that happens (likely August), may we recommend that you subscribe to the http://sindicetech.com Twitter feed. Via SindiceTech, in the coming weeks and months, we’ll make selected parts of the Sindice.com infrastructure available to developers and enterprises (e.g. you might have seen our recent SparQLed release).

Acknowledgments

Acknowledgments are due to the KISTI institute (Korea), which is now supporting some of the work on Sindice.com, and to OpenLink for kindly providing Virtuoso Cluster Edition, powering our SPARQL endpoint. Thanks also go to the LOD2 and LATC EU projects, to SFI and to the others we list here.

-

Updates on the Sindice SPARQL Endpoint

SPARQL is really hard to beat as a tool for experimenting with data integration across datasets. For this reason we get many requests about what data we have in sparql.sindice.com, how frequently it is updated, etc.

We admit there was a bit of disappointment, as in the past months we were not able to keep the promise of having the whole content also available via SPARQL and updated in real time. Sorry: it worked under certain conditions, but extending those has so far been elusive.

While the technology is steadily improving, we are happy to report recent improvements that should bring back SPARQL.sindice.com as a useful tool in your data explorations.

These changes have to do with what data we load and how often. First of all, there are 2 different kinds of datasets living in Sindice:

- Websites - these are harvested by the crawlers; the best ones are those connected to a sitemap.xml file or acquired via custom acquisition scripts (which are possible, just ask us). Websites can be added by anyone: just submit a sitemap and Sindice will start the processing.

- Datasets - these are in general LOD cloud datasets or other notable RDF datasets which are not connected to the Web in a way that is easy for Sindice to crawl and process. Datasets are added manually whenever we get requests.

SPARQL is undoubtedly a hardware-intensive business. For the SPARQL endpoint we use 8 machines with 48GB of RAM, divided into two clusters of 4 machines. Under this configuration, keeping all the content of Sindice (something close to 80 billion triples) is not feasible at the moment, as it would require at least 2 to 4 times as much hardware.

So we select the data that goes in the SPARQL endpoint as follows:

1) Websites (3 billion triples) are loaded based on their current ranking within Sindice plus a manual list (please ask if you wish a specific website to be added). Current list can be by the way.

2) Datasets (1.5 billion triples) are all loaded in full, see the list below.
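The dump list at the end of this post writes each count as "name, triples= N", with dots as thousands separators. As a quick sanity check on the 1.5 billion figure, here is a small Python sketch that parses those lines (copied verbatim into the code) and totals them; the regular expression is our own assumption about the list format.

```python
import re

DUMP_LIST = """\
dbpedia, triples= 698.420.083
europeana, triples= 127.002.152
cordis, triples= 7.107.144
eures, triples= 7.101.481
ookaboo, triples= 51.075.884
geonames, triples= 114.390.681
worldbank, triples= 370.447.929
omim, triples= 366.247
sider, triples= 18.887.105
goa, triples= 18.952.170
chembl, triples= 133.026.204
ctd, triples= 101.951.745
"""

# "name, triples= 1.234.567": the dots are thousands separators.
LINE = re.compile(r"^\s*(\w+)\s*,\s*triples=\s*([\d.]+)\s*$")


def parse_counts(text):
    """Map dataset name -> triple count for every well-formed line."""
    counts = {}
    for line in text.splitlines():
        m = LINE.match(line)
        if m:
            counts[m.group(1)] = int(m.group(2).replace(".", ""))
    return counts


counts = parse_counts(DUMP_LIST)
total = sum(counts.values())
print(f"{len(counts)} datasets, {total:,} triples")
# 12 datasets, 1,648,728,825 triples
```

So the listed dumps add up to roughly 1.65 billion triples, the same order of magnitude as the rounded figure above.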

Datasets we currently hold in the SPARQL endpoint can be retrieved with the SPARQL query below (sparql.sindice.com) or from the list at the end of this post:

Note that in case of website datasets, we group the data by (second-level) domain. So all the triples coming from the same domain belong to the same website dataset.
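Grouping by second-level domain can be illustrated with a few lines of Python. This is a naive sketch under a stated assumption: it takes the last two labels of the host name, so it would wrongly group hosts under multi-label public suffixes such as "co.uk" (a real implementation would consult the Public Suffix List). The helper names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def second_level_domain(url):
    """Naive second-level domain: the last two labels of the host name.

    Assumption: ignores multi-label public suffixes such as 'co.uk'.
    """
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def group_by_dataset(document_urls):
    """Count documents per website dataset (second-level domain)."""
    return Counter(second_level_domain(u) for u in document_urls)


urls = [
    "http://www.scribd.com/doc/1",
    "http://scribd.com/doc/2",
    "http://dbpedia.org/resource/Galway",
]
print(group_by_dataset(urls))
```

Here both scribd.com URLs, with and without "www", fall into the same website dataset.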

PREFIX dataset: <http://vocab.sindice.net/dataset/1.0/>
PREFIX void: <http://rdfs.org/ns/void#>
SELECT ?dataset_uri ?dataset_name ?dataset_type ?triples ?snapshot
FROM <http://sindice.com/dataspace/default/dataset/index>
WHERE {
  ?dataset_uri dataset:type ?dataset_type ;
               dataset:name ?dataset_name .
  OPTIONAL {
    ?dataset_uri dataset:void ?void_graph ;
                 dataset:snapshot ?snapshot .
    GRAPH ?G { ?dataset_uri void:triples ?triples . }
  }
}

See the results here.
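To run a query like this from a script, a client only needs the standard SPARQL Protocol (the query sent as a `query` parameter) and the standard SPARQL JSON results format. The Python sketch below builds such a request and flattens a results document. Two assumptions: the `/sparql` path on sparql.sindice.com is a guess (the post only names the host), and the sample JSON is made up to show the shape of the results, not real output from the endpoint.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: the post only names the host; the /sparql path is a guess.
ENDPOINT = "http://sparql.sindice.com/sparql"


def build_request(query, endpoint=ENDPOINT):
    """Build a SPARQL Protocol GET request that asks for JSON results."""
    url = endpoint + "?" + urlencode({"query": query})
    return Request(url, headers={"Accept": "application/sparql-results+json"})


def rows(results_json):
    """Flatten SPARQL JSON results into a list of {variable: value} dicts.

    Variables left unbound (e.g. by OPTIONAL) are simply absent from a row.
    """
    data = json.loads(results_json)
    return [{var: cell["value"] for var, cell in binding.items()}
            for binding in data["results"]["bindings"]]


request = build_request("SELECT ?s WHERE { ?s ?p ?o } LIMIT 1")
# urllib.request.urlopen(request) would send it; not done here.

sample = """{"head": {"vars": ["dataset_name", "triples"]},
 "results": {"bindings": [
   {"dataset_name": {"type": "literal", "value": "dbpedia"},
    "triples": {"type": "literal", "value": "698420083"}},
   {"dataset_name": {"type": "literal", "value": "example.org"}}]}}"""
print(rows(sample))
```

Because `?triples` and `?snapshot` sit inside OPTIONAL in the query above, rows for some datasets may come back without them, which the flattening keeps visible as missing keys.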

As a result of this new setup and of the new procedures, we believe the reliability of the SPARQL endpoint is now much greater. We look forward to your feedback on this.

What’s next?

The next thing that’s happening is that you will be able to search from the homepage and see directly in the UI what’s in SPARQL and what’s not. You’ll also be able to request that a dataset (or a website) be added. Adding datasets will go through moderation, but it will be relatively fast.

For the moment, the SPARQL endpoint will be updated once a month or on request. In the future we’ll switch to at least twice a month.

Real-time updated results are of course always available via the regular Sindice API (courtesy of SIREn).

List of DUMP datasets

dbpedia, triples= 698.420.083

europeana, triples= 127.002.152

cordis, triples= 7.107.144

eures, triples= 7.101.481

ookaboo, triples= 51.075.884

geonames, triples= 114.390.681

worldbank, triples= 370.447.929

omim, triples= 366.247

sider, triples= 18.887.105

goa, triples= 18.952.170

chembl, triples= 133.026.204

ctd, triples= 101.951.745

-

Sig.ma Enterprise Edition (EE) available

The original Sig.ma

The http://sig.ma service was created as a demonstration of a live, on-the-fly Web of Data mashup. Provide a query and Sig.ma will demonstrate how the Web of Data is likely to contain surprising structured information about it (pages that embed RDF, RDFa, Microdata, Microformats).

By using the Sindice search engine Sig.ma allows a person to get a (live) view of what’s on the “Web of Data” about a given topic. For more information see our blog post. For academic use please cite Sig.ma as in [1].

Introducing Sig.ma Enterprise Edition (EE)

We’re happy today to introduce Sig.ma EE, a standalone, deployable, customisable version of Sig.ma.

Sig.ma EE is deployed as a web application and performs on-the-fly data integration from both local data sources and remote services (including Sindice.com): just like Sig.ma, but mixing your chosen public and private sources.

Sig.ma EE currently supports the following data providers:

- SPARQL endpoints (Virtuoso, 4store)

- YBoss + the Web (Uses YBoss to search then Any23 to get the data)

- Sindice (with optionally the ability to use Sindice Cache API for fast parallel data collection)

It is very easy to customise visually and to implement new custom data providers (e.g. for your relational or CMS data) by following documentation provided with Sig.ma EE.

Want to give it a quick try? Sig.ma EE now also powers the service on the http://sig.ma homepage, so you can add a custom data source (e.g. your publicly available SPARQL endpoint) directly from the “Options” menu you get after you search for something.
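The general pattern behind pluggable data providers, each answering a query and the results being merged into one view per entity, can be sketched in a few lines. Note that this is a hypothetical Python illustration of the pattern only: `DataProvider`, `StaticProvider` and `mashup` are names invented here, not Sig.ma EE's actual interfaces (see the documentation shipped with Sig.ma EE for those), and the merge is far simpler than Sig.ma's real consolidation.

```python
from abc import ABC, abstractmethod


class DataProvider(ABC):
    """Hypothetical provider interface (not Sig.ma EE's actual API)."""

    @abstractmethod
    def search(self, query):
        """Return a list of (subject, property, value) statements."""


class StaticProvider(DataProvider):
    """Toy provider backed by an in-memory list, e.g. a private source."""

    def __init__(self, statements):
        self.statements = statements

    def search(self, query):
        q = query.lower()
        return [st for st in self.statements
                if q in st[0].lower() or q in str(st[2]).lower()]


def mashup(query, providers):
    """Merge statements from all providers into one view keyed by subject."""
    view = {}
    for provider in providers:
        for subject, prop, value in provider.search(query):
            values = view.setdefault(subject, {}).setdefault(prop, [])
            if value not in values:  # drop duplicates across sources
                values.append(value)
    return view


public = StaticProvider([("ex:galway", "label", "Galway"),
                         ("ex:galway", "country", "Ireland")])
private = StaticProvider([("ex:galway", "label", "Galway"),
                          ("ex:galway", "note", "internal record")])
print(mashup("galway", [public, private]))
```

The design point is that the aggregator never needs to know where statements come from, so adding a relational or CMS source means writing one more provider class.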

Sig.ma EE is open source and available for download. The standard license under which Sig.ma EE is distributed is the GNU Affero General Public License, version 3. Sig.ma EE is also available under a commercial licence.

Links

- Download Page

- Source Code

- Documentation page.

If you would like to share any feedback with our team, at this point please use the sindice-dev google group.

[1] Giovanni Tummarello, Richard Cyganiak, Michele Catasta, Szymon Danielczyk, Renaud Delbru, Stefan Decker “Sig.ma: Live views on the Web of Data”, Journal of Web Semantics: Science, Services and Agents on the World Wide Web - Volume 8, Issue 4, November, Pages 355-364

-

Sindice reindexed: find your datasets (much faster)

Having streamlined several procedures inside Sindice, we can now rebuild the Sindice index from scratch in just a few hours.

Over the weekend, we built a new Sindice index based on the latest updates of SIREn and improvements to the pipelines. This is now in production and sports the following enhancements:

Ranking

- no more big docs first (sorry guys, this was an issue in the last weeks)

- properties are weighted differently

Preprocessing

- Improved support for encoded URIs: encoded characters in URIs are decoded prior to indexing, and both versions of the URI, the decoded and the encoded one, are indexed. As a consequence, if you look for a URI (or keyword) with special characters (e.g., Knud_Möller), it will also match encoded URIs (e.g., http://dblp.l3s.de/d2r/resource/authors/Knud_M%C3%B6ller).

- Improved tokenisation of URI local names: previously a URI local name was tokenised, but the non-tokenised version of the local name was not indexed. For example, given the URI http://rdf.data-vocabulary.org/#startDate, we now index start, date and startDate as part of the local name (the latter was not possible previously).

- Improved support for mailto URIs: previously the URI mailto:test@test.com was improperly tokenised and indexed. Now you can search for either mailto:test@test.com or test@test.com, and both will match the mailto URI.
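The three preprocessing rules above can be imitated with the Python standard library. This is a minimal sketch of the behaviour as described, not SIREn's actual analyzer code: the helper names are hypothetical, and the camelCase splitting regex is our own choice.

```python
import re
from urllib.parse import unquote


def uri_variants(uri):
    """Index both the encoded and the percent-decoded (UTF-8) form."""
    return {uri, unquote(uri)}


def local_name_tokens(uri):
    """Tokens for a URI local name: camelCase parts plus the whole name."""
    local = re.split(r"[#/]", uri.rstrip("/#"))[-1]
    # Lowercase words, ACRONYMS not followed by lowercase, or digit runs.
    parts = re.findall(r"[A-Z]?[a-z]+|[A-Z]+(?![a-z])|\d+", local)
    return {p.lower() for p in parts} | {local}


def mailto_tokens(uri):
    """Make 'mailto:x@y' findable both with and without the scheme."""
    if uri.startswith("mailto:"):
        return {uri, uri[len("mailto:"):]}
    return {uri}


print(local_name_tokens("http://rdf.data-vocabulary.org/#startDate"))
print(mailto_tokens("mailto:test@test.com"))
```

Keeping the whole local name next to its parts is what lets a query for "startDate" match exactly, while "start" or "date" still match as separate keywords.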

Query Language and processing

- Improved support for special characters in URIs. E.g. previously, a tilde ‘~’ in a URI invalidated the query.

- Various bug fixes as reported by users.

- Improved query processing for N-Triple queries, with huge performance benefits in certain situations.

Index Data Structure

- Improved index compression.

These are the technical details.

In practice, what I love most is that my favorite queries (the group-by-dataset ones, i.e. open web dataset finding queries) now run easily 10 times faster :).

As always, follow the development of our core open source Semantic Information Retrieval Engine SIREn on github:

-

Searching infinite amounts of Web Data: The new Sindice Index and Frontend

Several goals have kept the Sindice team constantly busy in the past year or so. Luckily we’re now getting close to deploying them, and today we’re happy to begin by introducing SIREn, the new Sindice core index, along with its supporting new frontend and API.

SIREn: Sindice’s own semantic search engine

SIREn (Semantic Information Retrieval Engine) is the new engine serving queries on our frontend and API. The difference between SIREn and your well-known RDF databases is that it uses the same principles found in web search engines rather than techniques derived from the database world; namely, it is an Information Retrieval based engine.

Typically, information retrieval is associated with full-text queries, and these are supported by SIREn too. On top of this, however, SIREn adds support for certain structured queries, allowing you, for example, to look for documents that contain specific triple patterns or text within only given properties.

So, while on the one hand SIREn does not implement full SPARQL (and likely never will), on the other you get benefits which are highly appealing for a search engine:

- Theoretically infinite scalability in a cluster setting

- Very low hardware demand per amount of data indexed (as compared to RDF databases)

- Fast, incremental indexing. No slowdowns as data size grows.

- Advanced ranking of query results, top K

On top of this, SIREn is not programmed from scratch; rather, it is built as a plugin for Apache Solr, thus inheriting all the enterprise-strength features it provides.

To give you an idea of the hardware requirements: an index of in excess of 20B triples all fits on 1 master server machine, which we then split in 2 for query throughput and duplicated in a master-slave configuration for service smoothness during updates.

Keeping in mind that we’re talking about very different query capabilities, we can report that in terms of performance, SIREn can index 20B triples in 1 day on 2 machines. As a term of comparison, without getting into precise details, let us assure you that this is, at the moment, 4 to 6 times faster than what we have seen achieved in practice with the best DB-related technologies at hand, running on twice as much hardware while indexing half the data (10B).
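For a feel of what that indexing rate means, here is the back-of-the-envelope arithmetic, taking the figure above as 20 billion triples indexed in one day (86,400 seconds) across two machines and assuming the load is split evenly:

```latex
\frac{20 \times 10^{9}\ \text{triples}}{86\,400\ \text{s}} \approx 2.3 \times 10^{5}\ \text{triples/s},
\qquad
\frac{2.3 \times 10^{5}\ \text{triples/s}}{2\ \text{machines}} \approx 1.2 \times 10^{5}\ \text{triples/s per machine}
```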

SIREn is open source, released under the Apache licence. For scientific details, please refer to “R. Delbru, S. Campinas, G. Tummarello. Searching Web Data: an Entity Retrieval and High-Performance Indexing Model.”, to appear in the Journal of Web Semantics.

Test-driving SIREn in action: the new Sindice frontend and API

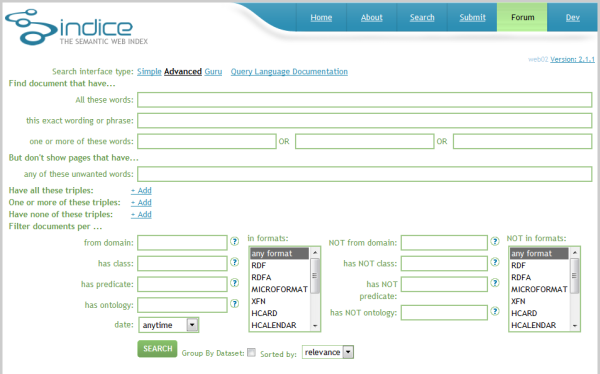

The best way to see (most of) SIREn’s features in action is to take a test drive with the new Sindice frontend, serving queries on the live-updated Sindice dataset (230M RDF documents at the time of writing), e.g. from the “advanced” view.

From here you can create complex queries, e.g. combining keyword queries with more advanced queries such as those requiring certain triple patterns (e.g. <s> <p> <o> → * foaf:knows http://g1o.net/foaf.rdf#me) or other requisites such as domain name, format, date etc. The full documentation is available online.
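The semantics of a triple pattern with a wildcard, like the `* foaf:knows http://g1o.net/foaf.rdf#me` example, can be shown with a small Python sketch. It is illustrative only: the prefix table and the helper names are our own assumptions (the FOAF namespace is the standard one), and the linear scan over documents merely shows what counts as a match, whereas SIREn answers such patterns from its inverted index.

```python
# Assumption: a tiny prefix table; only foaf: is needed for the example.
PREFIXES = {"foaf": "http://xmlns.com/foaf/0.1/"}


def expand(term):
    """Expand 'prefix:name' via PREFIXES; leave '*' and full URIs alone."""
    if term == "*" or term.startswith(("http://", "https://")):
        return term
    prefix, sep, name = term.partition(":")
    if sep and prefix in PREFIXES:
        return PREFIXES[prefix] + name
    return term


def pattern_matches(pattern, triple):
    """'*' matches any term; other terms must be equal after expansion."""
    return all(p == "*" or expand(p) == t for p, t in zip(pattern, triple))


def documents_matching(pattern, documents):
    """Ids of documents containing at least one matching triple."""
    return [doc_id for doc_id, triples in documents.items()
            if any(pattern_matches(pattern, t) for t in triples)]


docs = {
    "doc1": [("http://example.org/alice", "http://xmlns.com/foaf/0.1/knows",
              "http://g1o.net/foaf.rdf#me")],
    "doc2": [("http://example.org/bob", "http://xmlns.com/foaf/0.1/name",
              "Bob")],
}
print(documents_matching(("*", "foaf:knows", "http://g1o.net/foaf.rdf#me"), docs))
# ['doc1']
```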

In this release we then also have:

- Results filters, which show on the left in the result pages, to allow quick query refinement.

- Group by dataset - this is a first version. It turns document search into dataset search, showing you how many results there are per second-level domain (often equivalent to a dataset). For example, if you are looking for datasets containing events, you can find out that provides more than 700K documents containing event-related information.

- An improved ranking for search results. The main ranking logic is based on the TF-IDF methodology. However, we have added some particular rules under the hood to boost certain results: if your keywords match the URL of the document, its label, or one of the class or property URIs, the document will be ranked higher.

- Better support for multi-lingual characters (based on UAX#29 Unicode Text Segmentation, thanks to Lucene) and diacritical marks. With respect to diacritical marks, you can now search for either ‘café’ or ‘cafe’. Sindice will return results for documents about either “café” or “cafe”. In the first case (‘café’), results for documents containing ‘café’ will be ranked higher.
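The ‘café’/‘cafe’ behaviour can be illustrated with accent folding plus a bonus for exact matches. This Python sketch uses Unicode NFD decomposition from the standard library rather than Lucene's own folding filters, and its toy scoring (one point per folded match, one more for an exact match) is our assumption, not Sindice's TF-IDF ranking.

```python
import unicodedata


def fold(text):
    """Lowercase and strip diacritics, e.g. 'Café' -> 'cafe'."""
    decomposed = unicodedata.normalize("NFD", text.lower())
    return "".join(c for c in decomposed if not unicodedata.combining(c))


def same_text(a, b):
    """Exact (case-insensitive) comparison, robust to NFC/NFD differences."""
    return (unicodedata.normalize("NFC", a.lower())
            == unicodedata.normalize("NFC", b.lower()))


def score(query, doc_tokens):
    """Toy score: +1 per accent-insensitive match, +1 more if exact."""
    q_folded = fold(query)
    total = 0
    for token in doc_tokens:
        if fold(token) == q_folded:
            total += 1
            if same_text(token, query):
                total += 1
    return total


def ranked(query, docs):
    """Ids of documents with at least one match, best score first."""
    scores = {d: score(query, toks) for d, toks in docs.items()}
    return sorted((d for d in scores if scores[d] > 0),
                  key=lambda d: scores[d], reverse=True)


docs = {"a": ["café", "paris"], "b": ["cafe", "menu"], "c": ["tea"]}
print(ranked("café", docs))  # ['a', 'b']
print(ranked("cafe", docs))  # ['b', 'a']
```

Both spellings find both documents; only the order changes, which is the behaviour the bullet above describes.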

To build applications on top of Sindice, the entire capabilities of SIREn are available via a new API (v2).

Also, if you’re around SemTech this July, you can talk to us directly at our presentations: one about SIREn and the other where we’ll give a sneak peek at Sindice Site Services, a cool service still in alpha.

-

Sindice migration

This is mainly a test post to verify that the Sindice blog continues to work after migrating it to a new server. But it is also a good opportunity to briefly mention the upgrades we are making to the Sindice infrastructure. I’m happy to report that Sindice has been suffering from some growing pains over the last while. As a result, we are in the process of migrating to an improved infrastructure, increasing the capacity of individual servers and introducing failover support for critical parts of the infrastructure. Combined, these measures should, over the coming months, significantly increase the amount of data we can both fetch from the web of data and, in turn, process, transform and publish back to the semantic web community. Watch this space for more information over the next few months on our expanding capabilities.

-

Sindice now supports Efficient Data discovery and Sync

So far semantic web search engines and semantic aggregation services have been inserting datasets by hand or have been based on “random walk” like crawls with no data completeness or freshness guarantees.

After quite some work, we are happy to announce that Sindice is now supporting effective large scale data acquisition with *efficient syncing* capabilities based on already existing standards (a specific use of the sitemap protocol).

For example, if you publish 300000 products using RDFa or whatever you want to use (microformats, 303s etc.), by making sure you comply with the proposed method, Sindice will now guarantee you

a) to crawl your dataset completely (might take some time since we do this “politely”)

b) ...but only crawl you once and then get just the updated URLs on a daily basis! (so a timely data update guarantee)

So this is not “crawling” anymore, but rather a live “DB-like” connection between remote, diverse datasets, all based on HTTP. In our opinion this is a *very* important step forward for semantic web data aggregation infrastructures.
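The “crawl once, then only what changed” idea maps naturally onto the sitemap `<lastmod>` field. Below is a minimal Python sketch of the selection step using the standard sitemaps.org namespace; the selection policy (fetch unseen URLs, plus known URLs whose lastmod is newer than the last sync) and the helper names are our own assumptions, and the publishing page linked below remains the reference for what Sindice actually expects.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_lastmod(value):
    """Parse a sitemap <lastmod> (W3C Datetime) into an aware datetime."""
    value = value.strip()
    if len(value) == 10:  # date only, YYYY-MM-DD: assume midnight UTC
        dt = datetime.strptime(value, "%Y-%m-%d")
    else:
        dt = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return dt if dt.tzinfo else dt.replace(tzinfo=timezone.utc)


def urls_to_fetch(sitemap_xml, known_urls, last_sync):
    """URLs never fetched before, plus known URLs modified since last_sync."""
    root = ET.fromstring(sitemap_xml)
    selected = []
    for entry in root.findall("sm:url", NS):
        loc = entry.findtext("sm:loc", namespaces=NS).strip()
        lastmod = entry.findtext("sm:lastmod", namespaces=NS)
        if loc not in known_urls:
            selected.append(loc)  # first, complete crawl
        elif lastmod and parse_lastmod(lastmod) > last_sync:
            selected.append(loc)  # daily update: changed since last sync
    return selected


sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://example.com/p/1</loc><lastmod>2010-06-01</lastmod></url>
  <url><loc>http://example.com/p/2</loc><lastmod>2010-06-12T10:00:00+00:00</lastmod></url>
  <url><loc>http://example.com/p/3</loc></url>
</urlset>"""
known = {"http://example.com/p/1", "http://example.com/p/2"}
last_sync = datetime(2010, 6, 10, tzinfo=timezone.utc)
print(urls_to_fetch(sitemap, known, last_sync))
```

Here p/1 is skipped because it has not changed since the last sync, p/2 is refetched because its lastmod is newer, and p/3 is fetched because it has never been seen.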

The specification we support (and how to make sure you’re being properly indexed) is published here (pretty simple stuff actually!)

http://sindice.com/developers/publishing

and results can be seen from websites which are already implementing these (you might be already doing that indeed without knowing..)

http://sindice.com/search?q=domain:www.scribd.com+date:last_week&qt=term

Why not make sure that your site can be effectively kept in sync today?

As always, we look forward to comments, suggestions and ideas on how to better serve your data needs (e.g. yes, we’ll also support the OpenLink dataset sync proposal once the specs are finalized). Feel free to ask specific questions about this or any other Sindice-related issue on our dev forum http://sindice.com/main/forum

Giovanni,

on behalf of the team http://sindice.com/main/about. Special credits for this to Tamas Benko and Robert Fuller.

p.s. we’re still interested in hiring selected researchers and developers

-

Sindice planned downtime this weekend

Hi. Due to an expansion of one of our datacentres (and the electrical work that this implies), Sindice and related services such as sig.ma will be down from 1730 GMT+1, 11-Jun (Friday) to 1730 GMT+1, 12-Jun (Saturday). This major upgrade will give us increased room to grow the Sindice infrastructure over time. On 27-May we hit a major milestone: Sindice had indexed 100 million documents (over 6.5 billion triples) from the semantic web. At current rates of data acquisition, we expect to hit the 200 million document mark before Christmas, so we’ll need that extra room!

If you have any further queries on this downtime, please leave a comment or contact us via the Sindice Developers group.

Update: Thanks to the efforts of NUIG’s ISS team, sindice.com is back online ahead of schedule. All services (including sig.ma) should now be operational.

About this blog

In this blog you'll find announcements related to the Sindice project, as well as news about Semantic Web topics or technical issues strictly related to the search engine.
