New: Inspector, Full Cache API - all with Online Data Reasoning

Today we’re happy to release two distinct yet interplaying features in Sindice: the Sindice Inspector and the Sindice Cache API (both including Sindice’s Online Data Reasoning).

A) Sindice Inspector

- Takes anything with structured data in it (RDF, RDFa, Microformats) and provides several handy ways to view it:

- a “Sigma”-based view

- a novel card/frame-based view

- an SVG-based interactive graph view (à la Google Maps)

- sortable triples, with pretty-printing of namespaces

- full ontology tree view for Online Data Reasoning debugging

- Does live Online Data Reasoning: it lets a data publisher see which ontologies are imported, implicitly or explicitly (directly or indirectly, via other ontologies). It also:

- visualizes the full closure of inferred statements using different colors.

- provides a tree of the ontologies in use and their dependencies.

Ways to use it:

- as a tool on Sindice.com (the Inspect tab on the homepage) or from the Inspector homepage

- as a bookmarklet (drag it to your bookmarks bar and use it while browsing)

- as an API, either raw (Any23 output, no reasoning) or with reasoning

- as a way to pass around links to structured data files: every visualization has its own permalink.

Examples:

- Sortable Triples with Reasoning Closure (try)

- Ontology Import Tree of Axel Polleres’s DERI FOAF file (try; notice how the GEO ontology and the DCTerms elements are imported only indirectly, via other ontologies, yet still contribute to the reasoning)

- Graph of the RDF representation of Axel’s Facebook public profile (from microformats) (try).

- All the data in an eventful.com page (try)

B) Sindice Cache API

Tired of your favorite data being offline now and then? Convinced that you can’t really build a linked data application without a network safety net? Rejoice :-). With the Sindice Cache API you can:

- access and retrieve any of the (currently 64 million) RDF sources in Sindice through a simple REST API;

- access and retrieve the full set of inferred triples created by Online Data Reasoning (instant access to the precomputed closure, zero wait time);

- visualize the cache with the same handy tools available in the Inspector. Just try it from any Sindice result page.

Feel free to use the Sindice Cache with reasoning as a fallback service when data is not available, and as a way to add full recursive ontology importing plus reasoning support to your application (with none of the massive pain associated with doing the full procedure yourself). A document you need is not in the cache? Just ping its URL to Sindice and it will be available within minutes.
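The fallback pattern described above could look roughly like the following Python sketch. The post does not document the Cache API's URL scheme, so `CACHE_ENDPOINT` and the URL layout produced by `cache_url` are hypothetical placeholders; the fetcher is passed in as a parameter so the logic can be exercised without network access.

```python
import urllib.request
from typing import Callable, Tuple
from urllib.parse import quote

# HYPOTHETICAL: placeholder base URL, not the real Sindice Cache API endpoint.
CACHE_ENDPOINT = "http://cache.example.org/"

Fetcher = Callable[[str], bytes]


def http_fetch(url: str, timeout: float = 10.0) -> bytes:
    """Fetch a URL over HTTP; network and HTTP errors raise URLError, a subclass of OSError."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def cache_url(doc_url: str) -> str:
    """Build the (hypothetical) cache lookup URL for a source document."""
    return CACHE_ENDPOINT + quote(doc_url, safe="")


def fetch_with_fallback(doc_url: str, fetch: Fetcher = http_fetch) -> Tuple[str, bytes]:
    """Try the live document first; if it cannot be retrieved, use the cached copy.

    Returns ("live", body) or ("cache", body) so callers know which copy they got.
    """
    try:
        return "live", fetch(doc_url)
    except OSError:
        # Origin unreachable or returned an HTTP error: fall back to the safety net.
        return "cache", fetch(cache_url(doc_url))
```

Returning the source label alongside the body lets an application flag data served from the cache as possibly stale.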

———————————-

But what is Online Data Reasoning exactly? Background and implementation

When RDF/RDFa data is put out there on the web, the “explicit” information given in the data is just part of the story. Reasoning is a process by which the vocabularies used in the data (ontologies) are analyzed to extract new, otherwise implicit, pieces of information: e.g. giving a “date of birth” to an “agent” would make that agent a “person”.
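The “date of birth” example corresponds to a domain axiom on the property. The following Python sketch applies only the RDFS domain rule (rdfs2) to triples stored as plain tuples; the `ex:` vocabulary is made up for illustration, and a real reasoner covers far more rules than this one.

```python
from typing import Dict, Set, Tuple

Triple = Tuple[str, str, str]

RDF_TYPE = "rdf:type"
RDFS_DOMAIN = "rdfs:domain"


def apply_domain_rule(triples: Set[Triple]) -> Set[Triple]:
    """Return the new triples entailed by rdfs2: (p rdfs:domain C), (s p o) => (s rdf:type C)."""
    domains: Dict[str, Set[str]] = {}
    for s, p, o in triples:
        if p == RDFS_DOMAIN:
            domains.setdefault(s, set()).add(o)
    inferred = {(s, RDF_TYPE, cls) for s, p, _o in triples for cls in domains.get(p, ())}
    return inferred - triples


# Hypothetical ontology and data.
ontology = {("ex:dateOfBirth", RDFS_DOMAIN, "ex:Person")}
data = {
    ("ex:alice", RDF_TYPE, "ex:Agent"),
    ("ex:alice", "ex:dateOfBirth", "1970-01-01"),
}
print(apply_domain_rule(ontology | data))  # {('ex:alice', 'rdf:type', 'ex:Person')}
```

Without the ontology the data alone entails nothing new, which is why fetching the right ontologies matters.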

Typically, Semantic Web software has manually imported the ontologies it needed. If ontologies are published following W3C best practices, however, the entire process can be automated: the URIs of properties and classes are resolvable (i.e. they can be fetched over HTTP), so all the ontologies a document uses can be imported automatically …

… but ontologies can import other ontologies, form cycles, and so on.
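Finding which ontology documents a single RDF document pulls in directly might be sketched in Python as follows. The skip list of built-in vocabularies and the rule “drop the fragment of hash URIs, dereference slash URIs as-is” are simplifying assumptions for illustration, not Sindice's actual code.

```python
from typing import Iterable, Set, Tuple
from urllib.parse import urldefrag

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
OWL_IMPORTS = "http://www.w3.org/2002/07/owl#imports"

# Built-in vocabularies a reasoner already knows (assumption: never fetched).
BUILTIN = {
    "http://www.w3.org/1999/02/22-rdf-syntax-ns",
    "http://www.w3.org/2000/01/rdf-schema",
    "http://www.w3.org/2002/07/owl",
}


def ontology_document(term_uri: str) -> str:
    """URL to dereference in order to find the definition of a property or class.

    Hash URIs are defined in the document without the fragment; slash URIs are
    dereferenced as-is (servers typically redirect to the vocabulary document).
    """
    return urldefrag(term_uri).url


def ontologies_referenced(triples: Iterable[Tuple[str, str, str]]) -> Set[str]:
    """Ontology documents a document pulls in directly, explicitly or implicitly."""
    docs = set()
    for _s, p, o in triples:
        if p == OWL_IMPORTS:
            docs.add(o)  # explicit: owl:imports names the ontology itself
            continue
        docs.add(ontology_document(p))  # implicit: a property from the ontology is used
        if p == RDF_TYPE:
            docs.add(ontology_document(o))  # implicit: a class from the ontology is used
    return docs - BUILTIN
```

Each returned document would itself be fetched and scanned the same way, which is where the recursion (and the possibility of cycles) comes from.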

Implementing this process efficiently (with maximal reuse of previous results across files), scalably (with algorithms and frameworks based on Hadoop) and correctly (e.g. with no “contamination” of reasoning results between different data files) was not, believe me, an easy task.

We invested in it, however, since we believe it is important to answer queries based also on the “implicit” part of the document: if nothing else, it allows the markup on web pages to be considerably more concise.

A glimpse behind the curtain

The implementation of the large scale reasoning pipeline is based on [1] and engineered on top of the Hadoop platform.

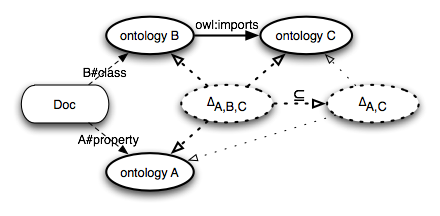

Ontologies in RDF/RDFa documents (and also in microformats that have been converted to RDF in some form) can be “included” either explicitly, with owl:imports declarations, or implicitly, by using property and class URIs that link directly to the data describing the ontology itself. As ontologies may refer to other ontologies, the import process must be iterated recursively until the dependency graph of ontologies is complete. For example, Fig. 1 shows the dependency graph of ontologies for a document Doc. The document imports a first ontology, A, through the use of the property A#property, and a second ontology, B, through the use of the class B#class. In addition, ontology B imports ontology C through an owl:imports assertion.

Fig. 1: A document and its dependency graph of ontologies (in bold) materialised. The dashed circles represent inferred ontological assertions in their own context.
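The recursive import step can be sketched as a graph traversal. In this Python sketch the map of direct imports is assumed to be precomputed (in practice each entry would come from fetching and parsing an ontology); the point is that a visited set makes the traversal terminate even when ontologies import each other in cycles.

```python
from collections import deque
from typing import Dict, Iterable, Set


def import_closure(doc: str, direct_imports: Dict[str, Iterable[str]]) -> Set[str]:
    """All ontologies reachable from doc by following imports recursively (breadth-first).

    direct_imports maps a document or ontology to the ontologies it imports,
    explicitly or implicitly.
    """
    seen: Set[str] = set()
    queue = deque(direct_imports.get(doc, ()))
    while queue:
        onto = queue.popleft()
        if onto in seen:
            continue  # already visited: this is what makes cyclic imports safe
        seen.add(onto)
        queue.extend(direct_imports.get(onto, ()))
    return seen


# The dependency graph of Fig. 1: Doc uses A#property and B#class; B owl:imports C.
fig1 = {"Doc": ["A", "B"], "B": ["C"]}
print(sorted(import_closure("Doc", fig1)))  # ['A', 'B', 'C']
```

Adding a cycle such as `"C": ["B"]` to the map leaves the result unchanged.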

In general, for each document one would have to recursively fetch the ontologies, create a model composed of these ontologies and the original document, and only at that point compute the deductive closure.

Clearly, doing this in isolation for each file is bound to be very time-consuming and generally inefficient, since much of the processing time would be spent recomputing deductions that could instead be reused across possibly large classes of other documents during indexing. To reuse previous inference results, a simple strategy has traditionally been to put several (if not all) of the ontologies together, compute their deductive closure, and reuse it across all the documents to be indexed. While this simple approach is computationally convenient, it turns out to be sometimes inappropriate, since data publishers can reuse or extend ontology terms with divergent points of view.
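One simple way to get reuse without pooling every ontology together is to cache deductive closures per set of ontologies, so that documents with exactly the same dependencies share one computation. The Python sketch below illustrates that idea under stated assumptions: it is not necessarily the scheme of [1], its “closure” only computes rdfs:subClassOf transitivity, and the ontologies `A` and `B` are hypothetical.

```python
from functools import lru_cache
from typing import FrozenSet, Tuple

Triple = Tuple[str, str, str]
SUBCLASS = "rdfs:subClassOf"

# Hypothetical ontology store: name -> axioms.
ONTOLOGIES = {
    "A": frozenset({("a:Student", SUBCLASS, "a:Person")}),
    "B": frozenset({("a:Person", SUBCLASS, "b:Agent")}),
}


@lru_cache(maxsize=None)
def tbox_closure(names: FrozenSet[str]) -> FrozenSet[Triple]:
    """Closure of the union of the named ontologies (only subClassOf transitivity here).

    Keyed by the *set* of ontologies: the same set is computed once and reused,
    while different sets never see each other's axioms.
    """
    axioms = set().union(*(ONTOLOGIES[n] for n in names))
    changed = True
    while changed:  # naive fixpoint iteration
        changed = False
        pairs = [(s, o) for s, p, o in axioms if p == SUBCLASS]
        for sub, mid in pairs:
            for mid2, sup in pairs:
                if mid == mid2 and (sub, SUBCLASS, sup) not in axioms:
                    axioms.add((sub, SUBCLASS, sup))
                    changed = True
    return frozenset(axioms)
```

Calling `tbox_closure(frozenset({"A", "B"}))` and then `tbox_closure(frozenset({"B", "A"}))` computes the closure once; a document depending only on `A` gets its own, smaller closure.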

For example, if an ontology other than the FOAF vocabulary itself declares foaf:name to be an inverse functional property (i.e. turns the name into a primary key), an inferencing agent should not consider this axiom outside the scope of the documents that reference this particular ontology. Doing so would severely decrease the precision of semantic querying by diluting the results with many false positives. For this reason, a fundamental requirement of the procedure we developed has been to confine ontological assertions and reasoning tasks into contexts, in order to track the provenance of inference results. Coming back to Fig. 1, the inferred ontological assertions are stored in “virtual contexts” (dashed circles), and the dashed links symbolise the origin of the inferred assertions, i.e. the set of ontologies that led to those assertions. By tracking the provenance of each single assertion, we are able to restrict inference to a particular context and prevent one ontology from altering the semantics of other ontologies on a global scale.
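To make the contamination risk concrete, the following Python sketch contrasts reasoning against a global pool of ontologies with reasoning against a document's own dependencies. It implements only the inverse-functional-property rule; the ontology names, the store, and the data are hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Iterable, Set, Tuple

Triple = Tuple[str, str, str]
RDF_TYPE = "rdf:type"
IFP = "owl:InverseFunctionalProperty"
SAME_AS = "owl:sameAs"

# Hypothetical store. FOAF axioms are omitted for brevity (FOAF itself does not
# declare foaf:name inverse functional); "X" is a third-party ontology that does.
STORE: Dict[str, FrozenSet[Triple]] = {
    "foaf": frozenset(),
    "X": frozenset({("foaf:name", RDF_TYPE, IFP)}),
}


def reason_in_context(data: Set[Triple], imported: Iterable[str]) -> Set[Triple]:
    """Infer owl:sameAs from inverse functional properties, using only `imported` ontologies."""
    tbox = set().union(*(STORE[n] for n in imported))
    ifps = {s for s, p, o in tbox if p == RDF_TYPE and o == IFP}
    subjects_by_value: Dict[Tuple[str, str], Set[str]] = {}
    for s, p, o in data:
        if p in ifps:
            subjects_by_value.setdefault((p, o), set()).add(s)
    return {
        (a, SAME_AS, b)
        for subjects in subjects_by_value.values()
        for a, b in combinations(sorted(subjects), 2)
    }


# A document that only uses FOAF and mentions two different people named John Smith.
doc = {("ex:js1", "foaf:name", "John Smith"), ("ex:js2", "foaf:name", "John Smith")}
print(reason_in_context(doc, STORE))     # global pool: {('ex:js1', 'owl:sameAs', 'ex:js2')}
print(reason_in_context(doc, {"foaf"}))  # its own context: set()
```

Only a document that actually depends on `X` should see the two people merged.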

This context-dependent Online Data Reasoning mechanism allows Sindice to avoid deducing undesirable assertions in documents, a common risk when working with the Web of Data. However, this context mechanism does not restrict the data publishers' freedom of expression. Data publishers are still allowed to reuse and extend ontologies in any manner, but the consequences of their modifications will be confined to their own context, i.e. their published documents, and will not alter the intended semantics of other documents on the Web.

When all the fragments of data, ontologies and partial reasoning results have been identified, they are all loaded into instances of Ontotext OWLIM, where the actual final inference takes place to produce the triples that will then be indexed. Big thanks to everyone at Ontotext for helping us with issues whenever we ran into them.

The technique presented here focuses mainly on the TBox level (ontology level), since it considers only import relations between ontologies as dependency relationships. However, the context-dependent reasoning mechanism can be extended to the ABox level: instead of following import relations, it would be possible, for example, to follow relations such as owl:sameAs between instances.

A bit about the performance

From: Robert Fuller [DERI]

Subject: Re: do we have numbers about the latest implementation of the reasoner?

Hi,

Based on the current run of the dump splitter, which is processing dbpedia.org:

Yesterday we processed 2.5 million documents, which works out to (just under) 30 per second across 3 Hadoop nodes, so that’s 10 per second on each node. I think there are 4 concurrent jobs running on each node, which works out to 400ms for a single document to apply reasoning and update the index and HBase.

I hope this helps.

Rob.

It does indeed.
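Rob's back-of-the-envelope numbers can be reproduced with a few lines of Python. This sketch assumes “yesterday” means a full 24-hour day and that the 4 concurrent jobs per node share the load evenly.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def per_document_ms(docs_per_day: float, nodes: int, jobs_per_node: int) -> float:
    """Average wall-clock time one job spends per document, in milliseconds."""
    docs_per_second = docs_per_day / SECONDS_PER_DAY  # cluster-wide throughput
    per_job = docs_per_second / (nodes * jobs_per_node)  # throughput of one job
    return 1000.0 / per_job


print(round(2_500_000 / SECONDS_PER_DAY, 1))   # 28.9 documents/s: "just under 30"
print(round(per_document_ms(2_500_000, 3, 4)))  # 415
```

With the unrounded throughput the figure is roughly 415 ms rather than 400 ms; the difference comes from rounding about 9.6 documents per second per node up to 10.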

Credits:

Reasoning Services and methodology [1] - Renaud Delbru, Michele Catasta, Robert Fuller

Data extraction - Any23 library - http://code.google.com/p/any23/ - Richard Cyganiak, Jurgen Umbrich, Michele Catasta

User interface and frontend programming - Szymon Danielczyk

The Card/Frame and SVG visualizations are courtesy of http://rhizomik.net/ - thanks especially to Roberto Garcia, who supported us during his visit this summer with his wife Rosa and little Victor. Hi guys.


[1] R. Delbru, A. Polleres, G. Tummarello and S. Decker. Context Dependent Reasoning for Semantic Documents in Sindice. In Proceedings of the 4th International Workshop on Scalable Semantic Web Knowledge Base Systems (SSWS), Karlsruhe, Germany.

One comment


Thanks for the ACK

And congrats to all the team that has made these nice tools possible. It is a pleasure to collaborate with you.